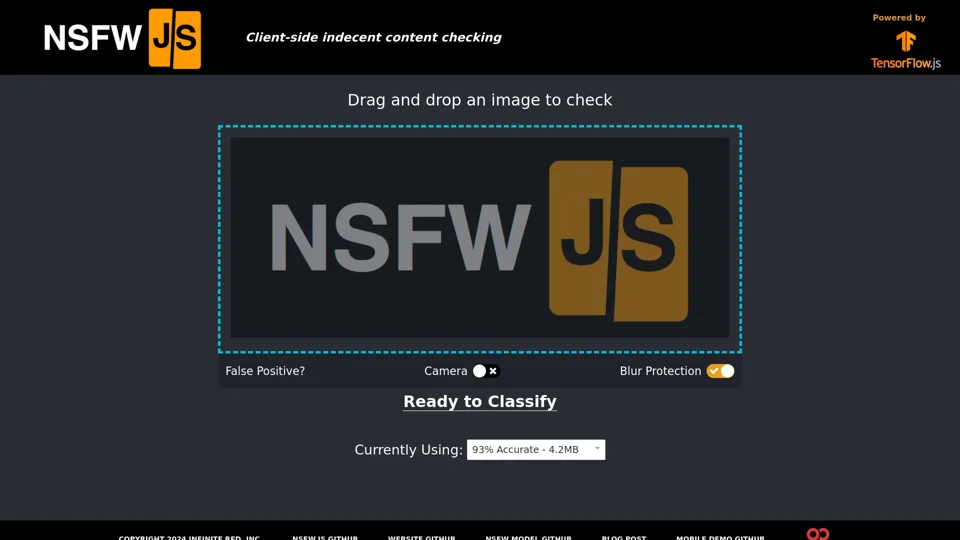

What is NSFW JS?

NSFW JS is a client-side tool that uses a machine learning model to detect indecent content in images. Users check an image for NSFW (Not Safe For Work) content by dragging and dropping it into the tool.

Features of NSFW JS

- Client-side checking: NSFW JS checks images on the client side, so no images are sent to a server for processing.

- Drag and drop functionality: Users can easily check images by dragging and dropping them into the tool.

- False positive reporting: Users can report false positives to help improve the accuracy of the model.

- Blur protection: NSFW JS includes blur protection to prevent accidental exposure to NSFW content.

- High accuracy: The model is 93% accurate and has been trained on a large dataset.

How to Use NSFW JS

- Drag and drop an image into the NSFW JS tool.

- The tool will analyze the image and provide a classification.

- If the image is classified as NSFW, it will be blurred to prevent accidental exposure.

- Users can report false positives to help improve the accuracy of the model.
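The classify-then-blur steps above can be sketched as a small decision function. This is a minimal illustration, not the tool's actual implementation: the `Prediction` shape mirrors the class name and probability pairs that the NSFW JS library's `classify()` call returns, while the `shouldBlur` helper, the choice of which classes count as NSFW, and the `0.6` threshold are all assumptions made for this example.

```typescript
// One prediction entry: a class name plus a probability between 0 and 1.
interface Prediction {
  className: string;
  probability: number;
}

// Assumption: these class names are treated as NSFW for blurring purposes.
const NSFW_CLASSES = new Set(["Porn", "Hentai", "Sexy"]);

// Hypothetical helper: returns true when the combined probability of the
// NSFW classes reaches the threshold (0.6 is an arbitrary example value).
function shouldBlur(predictions: Prediction[], threshold = 0.6): boolean {
  const nsfwScore = predictions
    .filter((p) => NSFW_CLASSES.has(p.className))
    .reduce((sum, p) => sum + p.probability, 0);
  return nsfwScore >= threshold;
}
```

In a browser, a page could apply a CSS rule such as `filter: blur(20px)` to the image element whenever `shouldBlur` returns true, and remove it when the user chooses to reveal the image.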

Pricing

NSFW JS is an open-source tool, and its use is free.

Helpful Tips

- Use NSFW JS for content moderation: NSFW JS can be used to moderate user-generated content and prevent the spread of NSFW material.

- Improve the model: Users can contribute to the improvement of the model by reporting false positives.

- Integrate with existing applications: NSFW JS can be integrated with existing applications to provide a safer user experience.
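As a rough illustration of the content moderation tip, an application might sort uploads into three outcomes based on an NSFW score, sending borderline cases to a human reviewer because the model is not perfectly accurate. Everything named here (`Upload`, `moderate`, `triage`, and the `0.4` and `0.8` cut-offs) is hypothetical and chosen for the example; the score is assumed to come from a prior classification step.

```typescript
type Decision = "allow" | "review" | "block";

// Hypothetical record for a user upload that has already been scored.
interface Upload {
  id: string;
  nsfwScore: number; // assumed range: 0 (safe) to 1 (NSFW)
}

// Map a score to a decision. The cut-offs are example values, not recommendations.
function moderate(upload: Upload, reviewAt = 0.4, blockAt = 0.8): Decision {
  if (upload.nsfwScore >= blockAt) return "block";
  if (upload.nsfwScore >= reviewAt) return "review";
  return "allow";
}

// Group upload ids by decision so each group can be handled separately.
function triage(uploads: Upload[]): Record<Decision, string[]> {
  const result: Record<Decision, string[]> = { allow: [], review: [], block: [] };
  for (const upload of uploads) {
    result[moderate(upload)].push(upload.id);
  }
  return result;
}
```

Keeping a middle "review" band is one possible design choice: it limits the impact of false positives on users while still stopping high-confidence NSFW material automatically.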

Frequently Asked Questions

- What is the accuracy of NSFW JS? NSFW JS is 93% accurate.

- How does NSFW JS work? NSFW JS uses a machine learning model to analyze images and classify them as NSFW or SFW.

- Is NSFW JS free? Yes, NSFW JS is an open-source tool and its use is free.

- Can I report false positives? Yes, users can report false positives to help improve the accuracy of the model.